03 Apr 2024

This series of blog posts provides a guided walk-through for using different smart contract security tools. These articles are for readers who wish to level up their Ethereum and Solidity security skills. Even if you’re familiar with these tools, these guides may reveal some hidden features.

Motivation

If you’ve heard about blockchain and smart contract security, you’ve also heard about the massive crypto hacks. There is currently a severe shortage of security know-how in the smart contract ecosystem, and the demand for these skills is high. yAcademy strives to grow security talent in the blockchain ecosystem, and sharing knowledge of common building blocks is an important piece of that. While the latest major hacks are (usually) beyond the ability of automated security tools, it’s important that good how-to guides for current security tools exist to make it easier to onboard people new to smart contract security. And if we want smart contract security tooling to improve, which would be very helpful given the security skillset shortage, the best way to incentivize tool improvement is to use and show appreciation for what already exists. Or even better, after you get familiar with these tools, you can start contributing to them, upgrading them, or building new and improved tools. While it is true that manual code reviews are mostly what the top smart contract auditing firms get paid for, those same auditors run these tools to catch low-hanging fruit.

The obvious question now is: what’s in the engn33r’s toolbox? These are the tools I consider the most useful and most commonly used by security people in the Ethereum ecosystem. If a tool listed below doesn’t have a hyperlink, the article is still being written - check again soon!

- Slither

- Echidna

- Mythril

- VS Code Extensions

- Etherscan

- Seth

- Tenderly

- Misc. web tools (ethtx.info, contract-library.com, etc.)

28 Sep 2020

The Advanced Web Security series looks beyond the OWASP Top 10 web application security risks. For the full list of articles in this series, visit this page.

Today’s web applications and systems are more complex than ever before. While servers should always send data to users over HTTPS, the usage of HTTPS does not guarantee that the data sent from the web server will be received only by the correct recipient. This may be surprising to hear, since in many minds HTTPS equals a green lock symbol which means everything is secure, right? Research from the past year shows otherwise! Request smuggling is a technique that allows a malicious user to receive server responses intended for other users of a web application. This type of attack makes it ever more important that web applications are designed to NOT send sensitive account data to the user (such as the user’s plaintext password, government ID information, payment details, etc.) and reinforces the importance of defense-in-depth. To reiterate, if a web application transmits sensitive account data back to the user, there is an opportunity for a high impact request smuggling attack - HTTPS will not provide protection against this risk.

What is request smuggling?

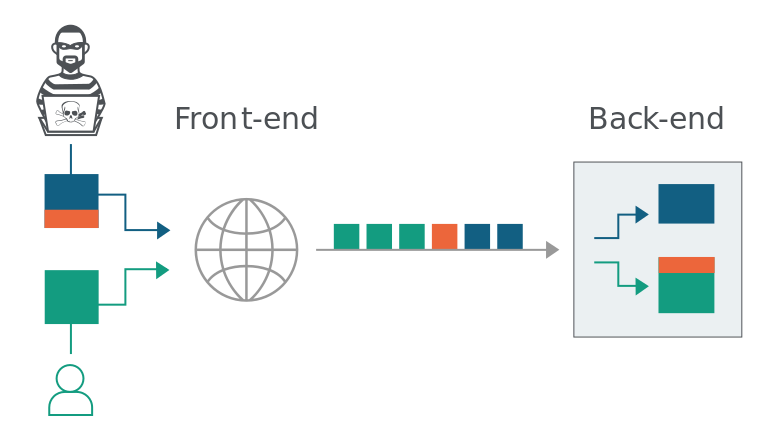

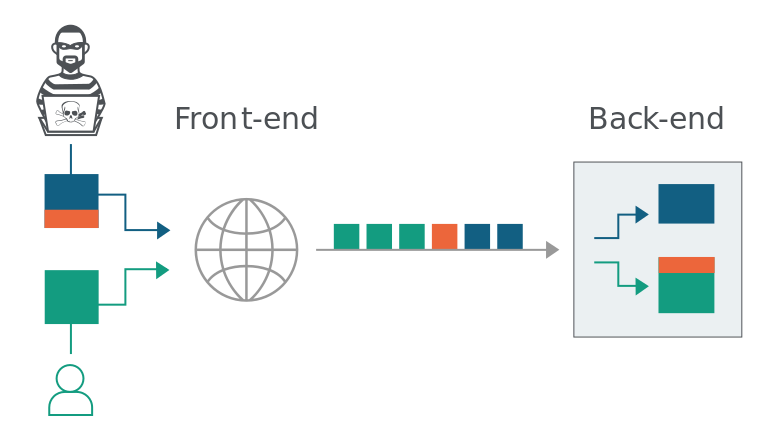

Every so often, a new attack surface is investigated in the world of web applications, and high impact vulnerabilities are found to affect a large number of websites. In my opinion, this was the case with SSRF (server-side request forgery) a few years ago, and I would argue that request smuggling did the same in 2019. While James Kettle, the researcher who popularized the vulnerability, humbly decided to remove his research from eligibility in the main PortSwigger Top 10 web hacking techniques of 2019 competition, request smuggling (specifically the HTTP Desync attack variant) easily won the “community favourite” category, indicating that the security community sees this attack vector as an important research development. Understanding request smuggling requires thinking about a web application as more than a single web server. Instead, the behavior of a web application is the sum of all the servers between your browser and the backend server, and this includes the frontend server (i.e. reverse proxy). Request smuggling targets the fact that the frontend and backend of the application both handle HTTP requests from users, but they may handle the requests in slightly different ways due to implementation differences between code bases. Specifically, request smuggling exploits a disagreement about where an HTTP request ends, which depends on how each server treats the “Content-Length” header and the “Transfer-Encoding” header. Due to this issue, we can encounter a scenario like the one shown in the accompanying diagram (borrowed from the request smuggling section of the excellent PortSwigger Web Academy). At a high level, a possible result of this vulnerability is that the backend server may return a response meant for a victim user to a malicious user, potentially exposing details about the victim to the attacker.

How does request smuggling really work under the hood?

I can’t claim to understand this attack better than the PortSwigger team that did this research in the first place, and therefore will defer to PortSwigger’s explanation for the deeper details of this attack. At the same time, I can try to provide a briefer explanation that may approach the topic from a different angle.

A web application’s frontend frequently takes incoming requests from users and forwards them to the backend over a single TLS connection. The frontend and backend are usually running different software, and certain edge cases of the HTTP/1.1 protocol are implemented differently in different software packages. A malicious user can exploit these differences by crafting an HTTP request that is understood one way by the frontend server and a second way by the backend server. Usually this is done by creating an HTTP request with a request body that contains data that looks like the start of another HTTP request. The backend server might treat this leftover data as the beginning of the following HTTP request (one that the malicious user did not send), effectively inserting a request into another user’s HTTP session. HTTPS offers no protection here, because this occurs on the server side after HTTP requests are decrypted. While HTTP request smuggling is usually performed using a POST request, since the body of the POST request is used in the exploit process, this detailed request smuggling writeup from the Knownsec 404 team shows a GET request can also perform request smuggling. While it might be possible to consider request smuggling on its own a form of reverse proxy bypass, the impact changes a lot when the server is using a web cache, has internal APIs, or provides other targets.
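To make the disagreement concrete, here is a minimal Python sketch of the classic variant where the frontend trusts Content-Length and the backend trusts chunked Transfer-Encoding. The two `split_by_*` functions are hypothetical, heavily simplified stand-ins for real server parsers, and the host name, paths, and `X-Ignore` header are made up for illustration. Nothing is sent over the network.

```python
def split_by_content_length(raw: bytes) -> tuple[bytes, bytes]:
    """Return (body, leftover) as a parser that trusts Content-Length would."""
    head, _, rest = raw.partition(b"\r\n\r\n")
    length = 0
    for line in head.split(b"\r\n")[1:]:  # skip the request line
        name, _, value = line.partition(b":")
        if name.strip().lower() == b"content-length":
            length = int(value.strip())
    return rest[:length], rest[length:]


def split_by_chunked(raw: bytes) -> tuple[bytes, bytes]:
    """Return (body, leftover) as a parser that trusts chunked encoding would."""
    _, _, rest = raw.partition(b"\r\n\r\n")
    body = b""
    while True:
        size_line, _, rest = rest.partition(b"\r\n")
        size = int(size_line.split(b";")[0], 16)  # chunk sizes are hexadecimal
        if size == 0:
            # A zero-size chunk ends the body; skip the final blank line
            # (this sketch assumes there are no trailer headers).
            leftover = rest[2:] if rest.startswith(b"\r\n") else rest
            return body, leftover
        body += rest[:size]
        rest = rest[size + 2:]  # skip the chunk data and its trailing CRLF


# Hypothetical attacker payload: the body starts with a terminating chunk,
# followed by the beginning of a second request.
smuggled = b"GET /admin HTTP/1.1\r\nX-Ignore: x"
body = b"0\r\n\r\n" + smuggled
request = (
    b"POST / HTTP/1.1\r\n"
    b"Host: example.test\r\n"
    b"Content-Length: " + str(len(body)).encode() + b"\r\n"
    b"Transfer-Encoding: chunked\r\n"
    b"\r\n" + body
)

fe_body, fe_leftover = split_by_content_length(request)
be_body, be_leftover = split_by_chunked(request)

# The frontend forwards everything as one request; the backend stops at the
# zero-size chunk and keeps the rest as the start of the *next* request.
victim = b"GET /account HTTP/1.1\r\nHost: example.test\r\n\r\n"
backend_sees_next = be_leftover + victim
print(backend_sees_next.decode())
```

In this sketch the victim’s request line ends up swallowed into the attacker’s `X-Ignore` header, so the backend processes the attacker’s chosen request line with the victim’s remaining headers. Real servers differ in many details, so treat this only as a model of the parsing mismatch.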

How can a penetration tester exploit request smuggling?

There are several options to exploit request smuggling, but if you’re not in a rush, I am currently in favor of exploiting this issue manually, at least at first. This provides a deeper understanding of how the server counts the Content-Length value, and how to build an HTTP request in the exact manner needed to exploit the server the way you intend. One tip based on a mistake I overcame: when modifying the Content-Length, a newline counts as 2 characters (\r\n). However, once you grasp how this vulnerability works, you no doubt will want to speed up the process. Burp Suite has a request smuggler extension, or you can use the turbo intruder Burp extension for more heavy duty testing. Outside of Burp Suite, this tool named “smuggler” is my preferred choice, and I don’t think there are currently many competing off-the-shelf tools. It may be possible to use curl or ncat based on this follow-up comment from James Kettle in a bug bounty report to the DoD, but this doesn’t appear to be a common method (this post was the only instance I found of using ncat for request smuggling).
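The newline tip is easy to check with a few lines of Python. This is a small sketch, assuming the body was typed in an editor that stores newlines as a single `\n`; the `content_length` helper and the sample body are hypothetical and only illustrate the byte counting.

```python
def content_length(body: str) -> int:
    """Count the bytes of a body once every newline is sent as CRLF."""
    # Collapse any existing CRLF first so it is not expanded twice.
    normalized = body.replace("\r\n", "\n").replace("\n", "\r\n")
    return len(normalized.encode("utf-8"))


# A hypothetical body as it might look when typed by hand (3 newlines).
body_as_typed = "0\n\nGET /admin HTTP/1.1\nX-Ignore: x"

naive = len(body_as_typed)              # counts each newline as 1 character
on_the_wire = content_length(body_as_typed)  # counts each newline as 2 bytes
print(naive, on_the_wire, on_the_wire - naive)
```

The difference between the two counts equals the number of newlines in the body, which is exactly the off-by-N error that makes a hand-built request fail.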

How to defend against request smuggling?

The easiest approach for small-scale web applications is to remove any CDN, reverse proxy, or load balancers! However, such a simplifying measure is impractical for larger web applications, which rely on these added complexities for increased performance, so the following mitigation strategies are commonly considered instead:

- Use HTTP/2 for connections to the backend: the root of the request smuggling vulnerability is ambiguity in HTTP/1.1 RFCs. The newer HTTP/2 protocol resolved the problematic ambiguities.

- Reconfigure frontend/backend communication to avoid connection reuse: Request smuggling relies on confusion about the division of multiple HTTP requests in a single connection. If these HTTP requests are each in a separate connection, there would be no confusion about where each HTTP request starts and ends.

- Use frontend and backend software that rely on the same HTTP request parsing: Request smuggling occurs because different software implementations in the frontend and backend handle ambiguous HTTP/1.1 edge cases differently. If the frontend and backend handle each HTTP request in the same manner, either because both use the same software or because close examination reveals consistent handling of the relevant headers in both pieces of software, then there is no parsing discrepancy for an attacker to exploit.
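When examining how two pieces of software handle the relevant headers, it helps to know which requests are ambiguous in the first place. The sketch below is one hypothetical way to flag them in a test harness: a request is treated as ambiguous if it carries both framing headers or conflicting Content-Length values. The function names are made up, and this is not a complete validator or a replacement for the mitigations listed above.

```python
def framing_headers(raw_head: bytes) -> dict[str, list[bytes]]:
    """Collect every Content-Length and Transfer-Encoding value in a header block."""
    found: dict[str, list[bytes]] = {"content-length": [], "transfer-encoding": []}
    for line in raw_head.split(b"\r\n")[1:]:  # skip the request line
        if not line:
            break  # blank line marks the end of the headers
        name, _, value = line.partition(b":")
        # Header names are matched leniently (whitespace stripped, case folded)
        # so that lightly obfuscated variants are still flagged.
        key = name.strip().lower().decode("ascii", "replace")
        if key in found:
            found[key].append(value.strip())
    return found


def is_ambiguous(raw_head: bytes) -> bool:
    """True if two parsers could plausibly disagree on where the body ends."""
    headers = framing_headers(raw_head)
    both_present = bool(headers["content-length"]) and bool(headers["transfer-encoding"])
    conflicting_lengths = len(set(headers["content-length"])) > 1
    return both_present or conflicting_lengths


ambiguous_head = (
    b"POST / HTTP/1.1\r\n"
    b"Host: example.test\r\n"
    b"Content-Length: 5\r\n"
    b"Transfer-Encoding: chunked\r\n\r\n"
)
plain_head = b"POST / HTTP/1.1\r\nHost: example.test\r\nContent-Length: 5\r\n\r\n"

print(is_ambiguous(ambiguous_head), is_ambiguous(plain_head))
```

Requests flagged this way are good candidates to replay against both the frontend and the backend to see whether they agree on the request boundary.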

References

James Kettle’s excellent research on the topic: https://portswigger.net/research/http-desync-attacks-request-smuggling-reborn

Portswigger Web Academy Request Smuggling labs: https://portswigger.net/web-security/request-smuggling

defparam’s Smuggler standalone Python tool: https://github.com/defparam/smuggler

Portswigger’s request smuggling Burp extension: https://github.com/PortSwigger/http-request-smuggler

14 Sep 2020

I’m on vacation for the week, and while I considered posting a technical article as usual, I decided this would be a good opportunity to highlight the importance of taking a break every now and then. For some of you this news will come as no surprise, but there may be others who are more focused on productivity and think “sleep is for the weak!” or “I’ll rest when I’m dead!”. For better or worse, I sometimes lean towards the latter category, so this post is for others who have difficulty with the choice between taking a break and maintaining an unbroken streak of productivity. I’m not suggesting long vacations are the most productive approach to every goal, but I would advocate that taking a rest can be extremely helpful when done right. By using the weasel words “when done right”, I admit the implementation details (frequency and length) of how breaks are taken will vary from one person to another, but the reasons why breaks should be taken are more consistent. So I am not here to advocate how one should take breaks in detail - I am only here to remind you to take the occasional break.

Perhaps the best reason to take breaks is to avoid burnout, which has gotten a bit of press in security. If you are unable to avoid burnout, your productivity will take a substantial hit regardless of whether you take a break or not, and no doubt your mindset will be better if you choose to take breaks rather than take the burnout route. A second place where breaks can be useful is in your daily routine itself. One example would be the Pomodoro technique, which I have found useful when I am focused on a single topic or project for large chunks of time. If you wish to try out the Pomodoro technique, there are plenty of mobile apps and websites that offer a free Pomodoro timer.

Another approach to breaks in a daily routine is to focus your energy during your most productive hours of the day, leaving the less productive parts of the day open for breaks (such as meals, etc.). Everyone’s internal clock operates a bit differently, and because of this we have different times during the day that we are more likely to do our best work. We hear frequently of people working 6 days a week or other stories of intense struggle, but we hear less about how efficiently some people use just a few hours per day to accomplish great things. I speculate this is because it is easier and more common to be productive than to be effective. To apply this second approach, I would suggest recording your work output in half-hour chunks for 1-3 weeks to determine what parts of the day you are more productive, and reviewing Peter Drucker’s timeless book “The Effective Executive” to go further and remove unnecessary tasks from your work or personal to-do list.

Now if only my future self would heed these words, since I did not take a break from writing a blog post… Wisdom is the ability to follow your own advice!

31 Aug 2020

Android application security is like the land that time forgot. Mobile security is behind the times - issues like hard-coded sensitive data, unencrypted transmission of sensitive data, and other simple vulnerabilities abound. Billions of devices run Android applications, and only a handful of security researchers are seriously focused on analyzing them. Meanwhile, there’s a wild bonanza in web application security, where some of the cutting edge research looks like the equivalent of a graduate level thesis! Why does reality look like this? I don’t know, but I’m convinced that Android app security has been underappreciated and should see a surge of interest soon. Let me share what has convinced me to focus my energy on Android security.

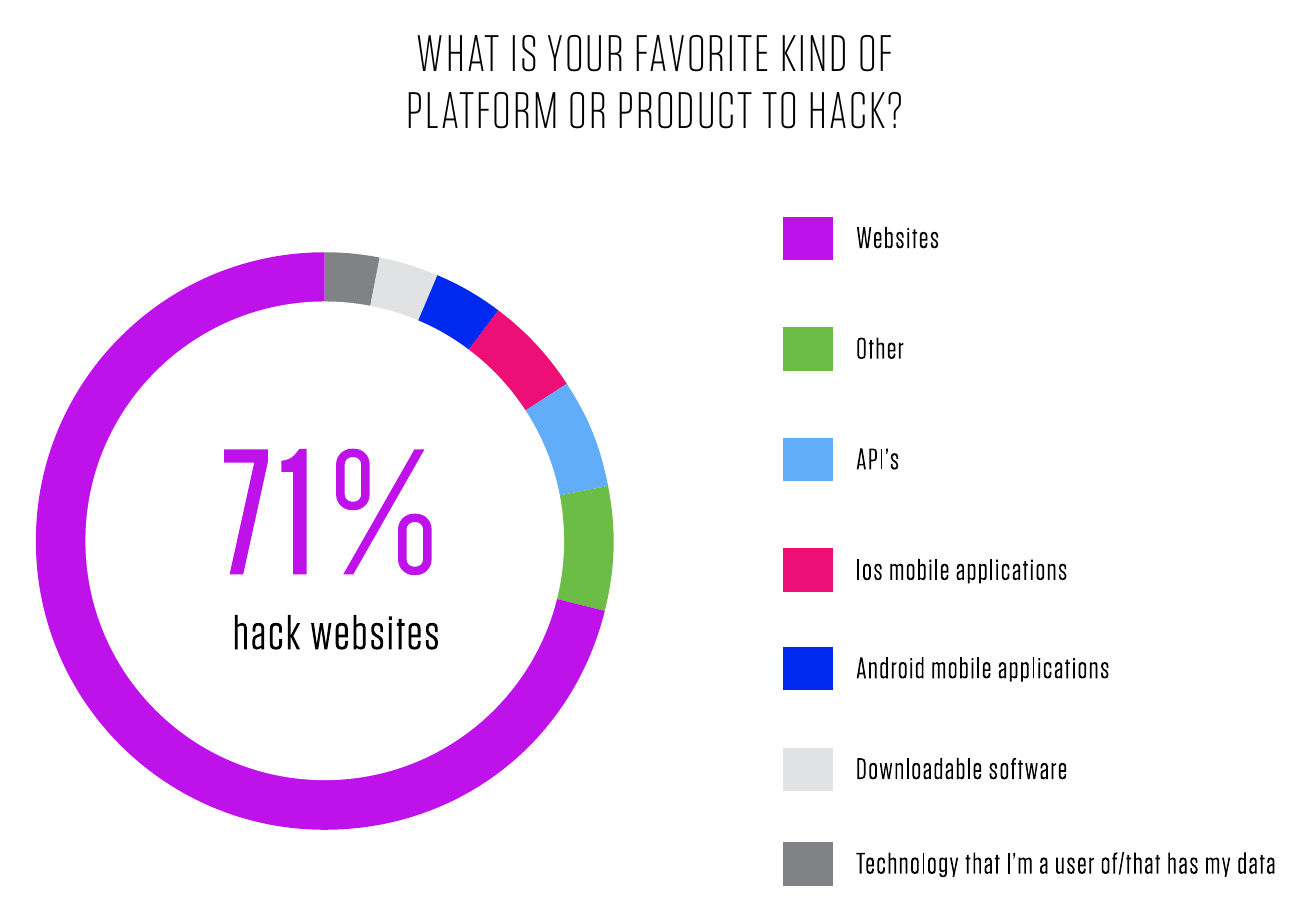

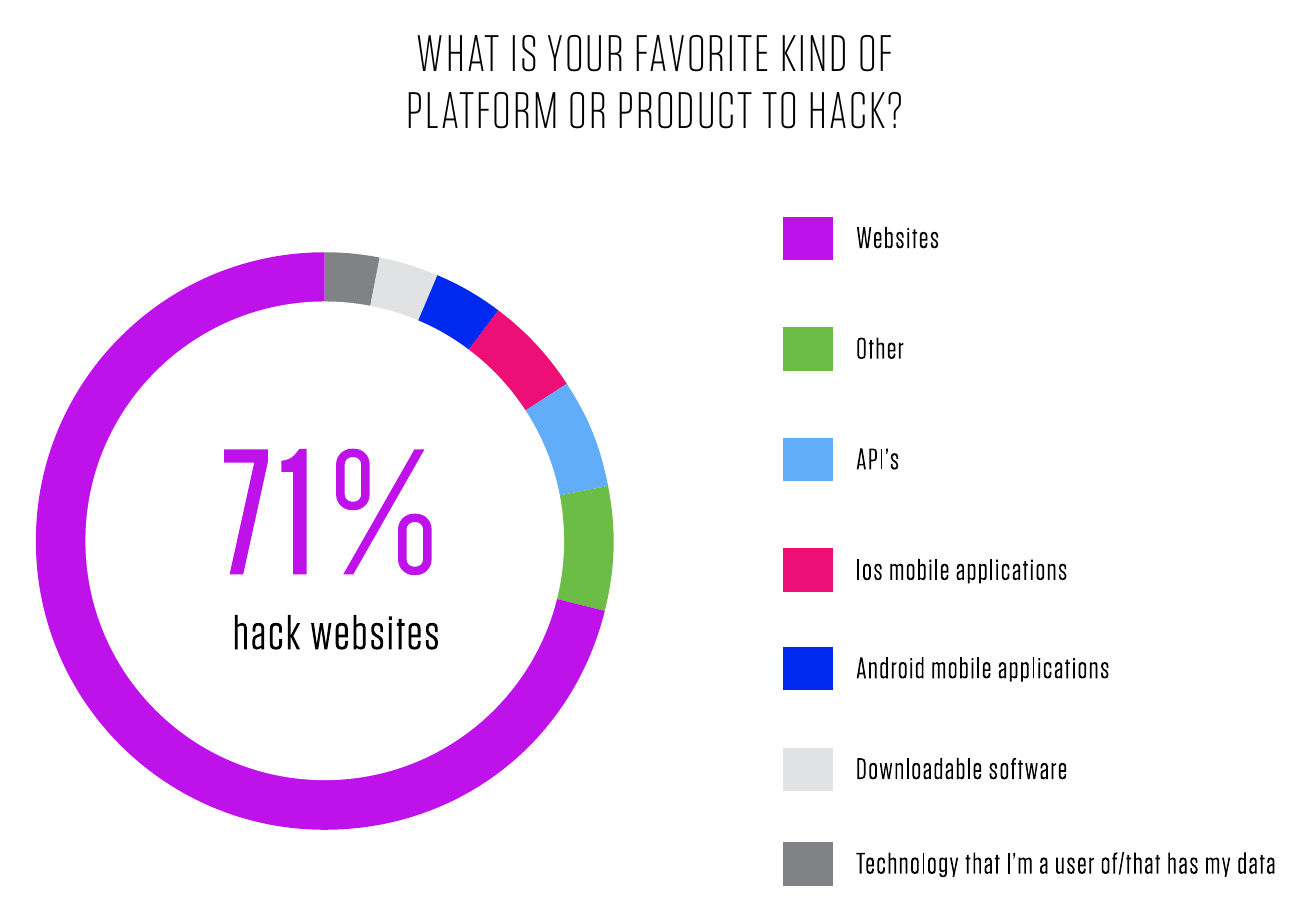

First, let’s look at a graph from HackerOne’s 2020 annual report showing how many hackers on the platform hack mobile applications:

HackerOne 2020 Annual Report

That dark blue sliver, around 3% of the graph, is the amount of interest that exists in Android application security compared to other assets on HackerOne. While the folks looking at web apps spend substantial effort to find undiscovered assets, Android apps sit alone in the corner, unloved. HackerOne even tried to drum up more interest in Android Security with #AndroidHackingMonth blogposts in February 2020, and one researcher who started looking at Android after seeing these blogposts (that’s less than 6 months ago) already scooped up $30k in bounties from a single vulnerability. Let’s check BugCrowd’s 2020 report:

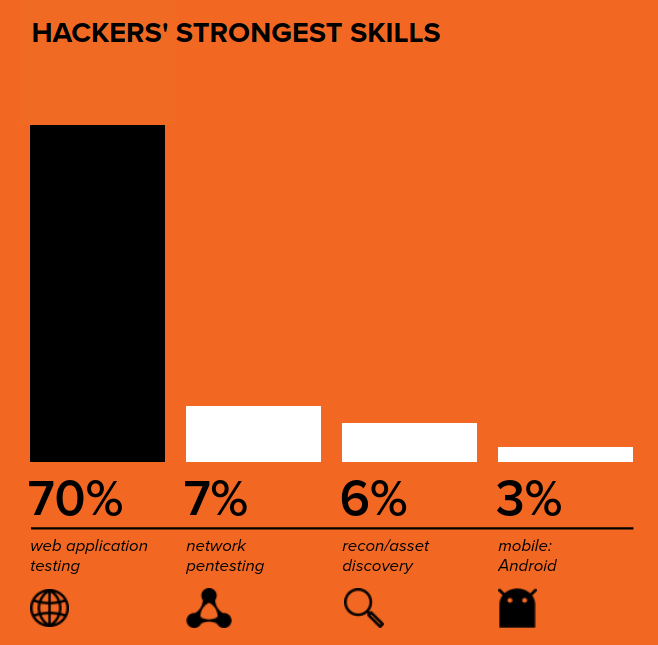

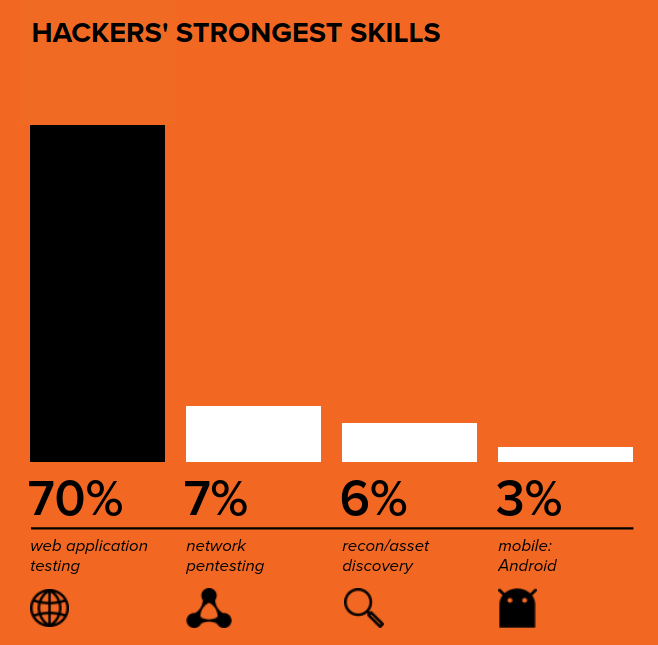

BugCrowd 2020 ITMOAH Report

Huh, BugCrowd’s graph implies that Android is where hackers are “weakest”. Not much competition there either. Let’s look elsewhere, at Sebastian Porst (Google Play Protect’s engineering manager) presenting at Bugcrowd’s LevelUp 0x05. I picked up on two interesting tidbits in the 4 minutes of video between minute 7 and minute 11 of this talk: (1) 75% of the bug bounty payments that Google made on their Google Play Security Reward program occurred in the 90 days before the presentation (so between July and Oct 2019), which means interest was ramping up, and (2) the number of vulnerabilities fixed in the 3 years prior to this talk (so 2016-2019) may make the Android app ecosystem security initiative the biggest application security program to date. Conveniently, Sebastian proceeds to outline different bug categories that you can start looking for.

BugCrowd LevelUp 0x05 Android app vulnerabilities presentation

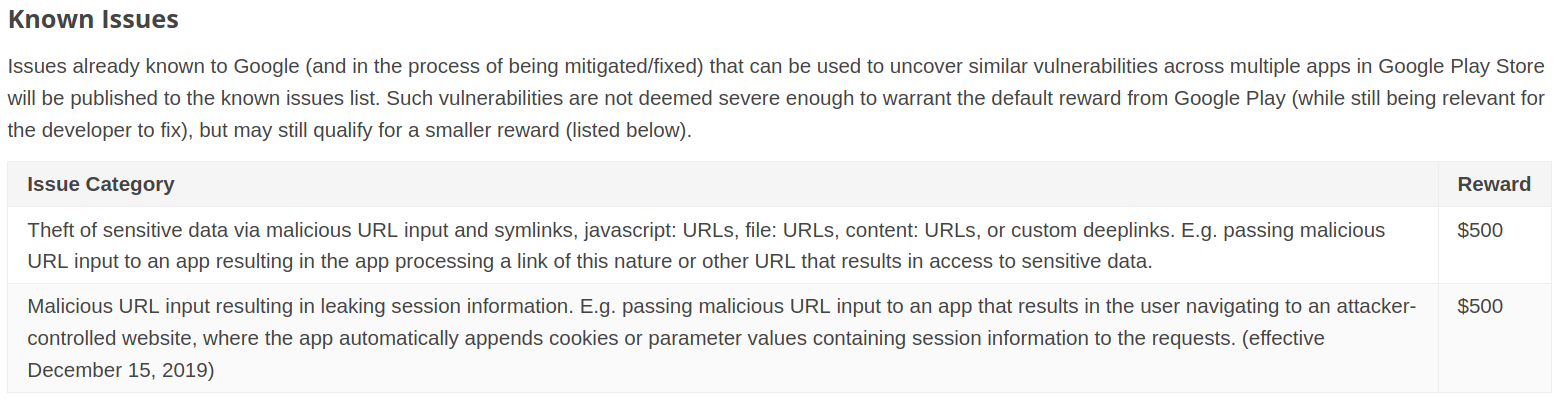

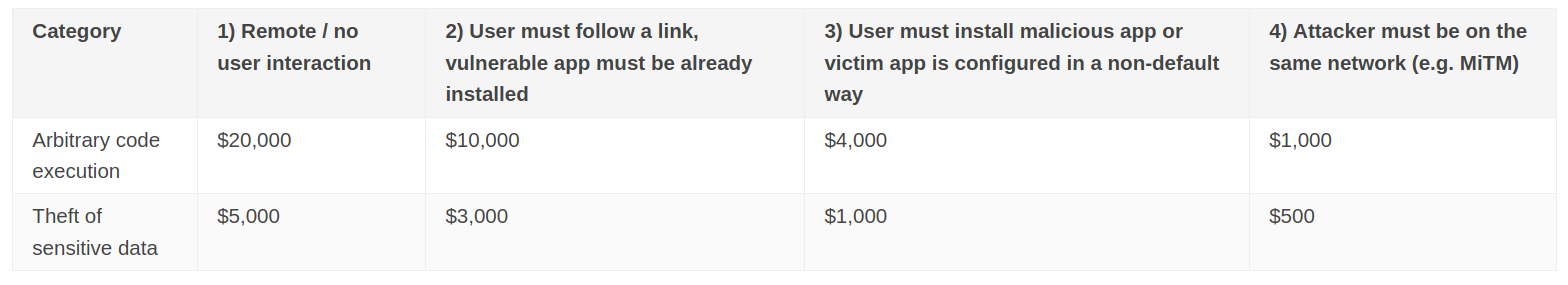

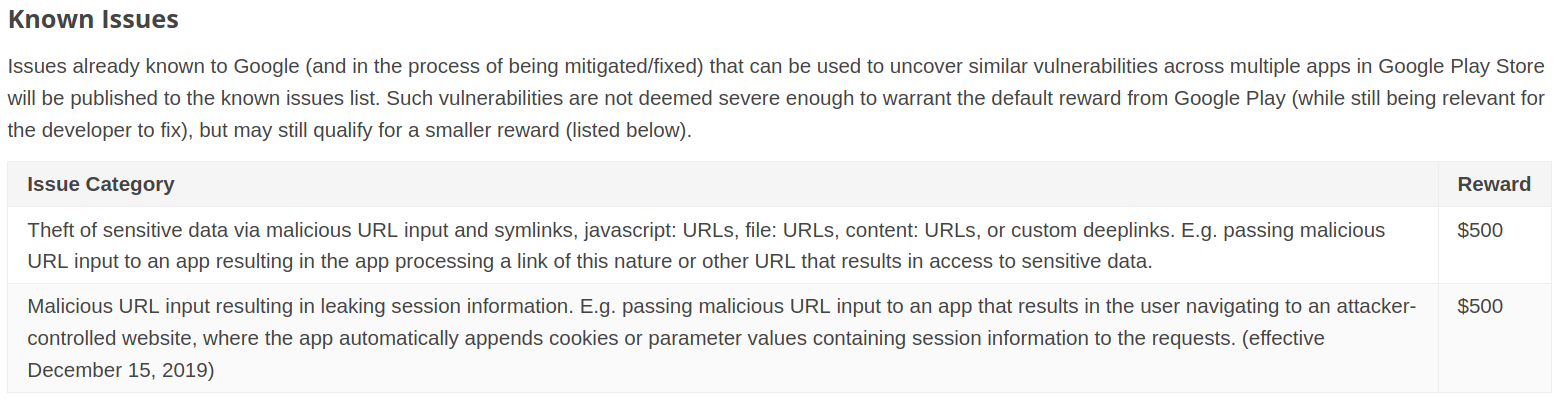

So basically Google’s Android bug bounty program, which covers any app with over 100 million installs (that’s around 500 targets, according to androidrank.org), is known to have many vulnerabilities and pays well. Okay, sounds like that’s motivation enough, but there’s more data from Google. This one comes directly from the Google Play Security Rewards Program website. To summarize the chart below, Google is saying that easy-to-find vulnerabilities exist in Android apps, and instead of classifying them as out of scope, Google instead describes these vulnerabilities and still offers a reward. Very kind of them!

Google Play Security Rewards Known Issues Chart

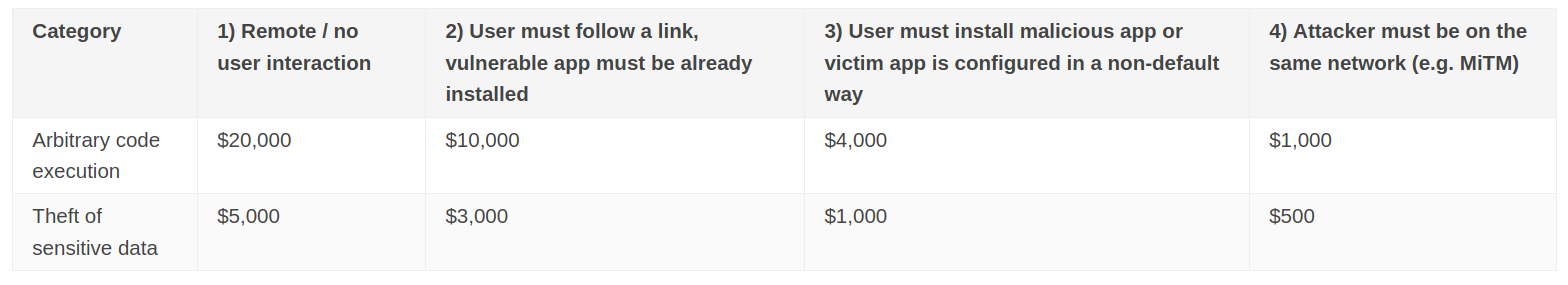

Of course, that chart is only for the low hanging fruit. The more complete list of rewards contains higher rewards, naturally. And remember that HackerOne, BugCrowd, Intigriti, and other sites have additional Android targets, many of which are conveniently curated in this GitHub repo.

Google Play Security Rewards Chart

Oh, and let’s wrap up by reminding everyone that you can download and decompile Android applications, instrument them with Frida on a rooted device, and basically examine all the ins and outs of the application that you might want to see. How many other target assets can claim to be this close to whitebox open-source testing? I don’t see many hands being raised.

Let’s recap: we have many targets, a large number of expected vulnerabilities, decent rewards, not much competition, and open source assets ready for examination. Not to mention that it’s possible to download hundreds of applications and use scripts to statically analyze the applications quickly. I’m sold, and in the coming months, I hope to share more info about my adventures looking at Android application security. If you can’t wait to dive in, take a look at @0xteknogeek’s #AndroidHackingMonth Guide for a decent primer on how to get started.
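As a rough idea of what scripted static analysis can look like, here is a minimal Python sketch that walks a directory of already-decompiled app sources and greps for a few signs of hard-coded sensitive data and unencrypted URLs. The directory layout, the file extensions, and the choice of patterns are all assumptions for illustration; the decompilation step itself is not shown, and real tooling would need far more patterns and noise filtering.

```python
import re
from pathlib import Path

# Illustrative patterns only: two well-known key prefixes and cleartext URLs.
PATTERNS = {
    "google_api_key": re.compile(r"AIza[0-9A-Za-z_\-]{35}"),
    "aws_access_key_id": re.compile(r"AKIA[0-9A-Z]{16}"),
    "cleartext_url": re.compile(r"http://[^\s\"'<>]+"),
}

# Assumed extensions for decompiled output; adjust to whatever your tooling emits.
SOURCE_SUFFIXES = {".java", ".kt", ".xml", ".smali", ".json"}


def scan_text(text: str) -> list[tuple[str, str]]:
    """Return (pattern_name, matched_text) pairs found in one file's contents."""
    return [
        (name, match.group(0))
        for name, pattern in PATTERNS.items()
        for match in pattern.finditer(text)
    ]


def scan_tree(root: str) -> list[tuple[str, str, str]]:
    """Scan every source-like file under root and return (path, name, match) triples."""
    findings = []
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in SOURCE_SUFFIXES:
            text = path.read_text(encoding="utf-8", errors="ignore")
            findings.extend((str(path), name, value) for name, value in scan_text(text))
    return findings


# Usage on a single made-up snippet (the key below is a fake placeholder).
sample = 'String key = "AKIAABCDEFGHIJKLMNOP"; String api = "http://api.example.test/v1";'
for name, value in scan_text(sample):
    print(name, value)
```

Expect plenty of false positives from the cleartext URL pattern, since XML namespace declarations also start with `http://`. Pointing `scan_tree` at a folder containing many decompiled apps is one cheap way to triage which ones deserve a closer manual look.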

17 Aug 2020

The first year of virtual DEF CON wrapped up last week, and what a firehose of information it was! I must admit this was the first DEF CON where I watched talks live (does watching a recorded talk on Twitch count as live?) as opposed to on YouTube after the event. Overall, I was impressed with how smoothly the event ran (or at least the parts that I saw). One perk of the online conference was that the 30 minute Q&A sessions seemed to move faster and more smoothly than in-person Q&As, and 30 minutes was enough to ask quite a few questions! The five talks that I highlight below are not in any particular order, since picking highlights is always a matter of opinion anyway.

- I think Joshua is rightfully getting substantial attention for his “When TLS hacks you” talk, as this appears to be a new web security attack. A summary of this new attack surface is SSRF over TLS, but I think the idea of using TLS for web attacks is relatively unexplored in itself. Joshua’s Q&A session was very relaxed and indicated that he hadn’t poked around this new attack surface fully, which leaves a lot of room for others to fill in the gaps and move this further. On a related note, the “Domain Fronting Using TLS 1.3” talk by Erik Hunstad (which also featured novel research!) also found a new way to use TLS, and I appreciate the creativity of these researchers using technology that’s in plain sight in new ways.

- Having spent some time diving into the depths of the Bluetooth core specification, I have been keeping track of the new Bluetooth-related CVEs that have been popping up over the last several years (summarized in this blog post). After unveiling InternalBlue at 35C3 a couple years ago, Jiska presented with Francesco Gringoli about a creative new attack on Bluetooth/WiFi combo chips. Since space is at a premium in smartphones, and because WiFi and Bluetooth share the 2.4 GHz band, most phones today use combination chips that implement both WiFi and Bluetooth. However, the phone has a clock that coordinates which protocol is able to transmit to avoid interference, and it’s this coordination mechanism that was attacked. Since phones will not be moving away from Bluetooth/WiFi combo chips (because of how compact and cheap they are), this and similar bugs at the intersection of different technologies will continue to be an interesting research area.

- Server-side template injection (SSTI) was something I first learned about thanks to PortSwigger’s fantastic Web Security Academy, and I suspect it is underrated because many of the findings to date rely on specific old versions of software being in use. However, discovering new vulnerabilities in the latest software versions is one solution to this! Munoz and Mirosh have had other awesome collaborations in the past (Friday the 13th JSON Attacks is worthwhile to read or skim), and this one produced over 30 CVEs - quite a collection. While the CVE in Microsoft Sharepoint seems to be getting a lot of press, there are many other well-known products (HubSpot, Atlassian Confluence) that will likely see the impact of this over the coming months if they’re not patched.

- An astronaut. At DEF CON! With cool space photos!! Pam’s talk was lots of fun and shared plenty of insider observations from firsthand experience of American spaceflight. To paraphrase one thing Pam said, the security risks are magnified when human lives are involved, and the ISS has people living up there 24/7/365. There are obviously concerns with GPS security and other satellite hacking (a topic which got substantially more interest this year), but this talk offered a perspective on the human element. Combining astronauts, space, and security just seems like a fantastic combo.

- Pedro Umbelino and Joao Morais presented some awesome vulnerabilities they uncovered in some of the most installed Android apps - the Google camera application and Samsung’s Find My Mobile application. The impact they were able to demonstrate with their findings was pretty impressive, as they put a fair bit of effort into adding capabilities to their proof of concept apps.

BONUS: Let’s face it, security can get pretty technical and no one likes being the dummy in the room. The War Story Bunker was an entertaining audio-only session on Discord where people chimed in with their stories of DEF CON and pen testing experiences. There were many epic wins and epic fails! Are you worried about deleting data during a pentest? Some of this year’s stories described accidentally taking down entire networks over substantial geographic areas! With these crazy stories to compare to, I expect to feel a lot better about any of the (reasonable) mistakes I might make.

Unfortunately, there’s no recording of the audio-only War Story Bunker session that I know of!
